
Ritherdon Charts

This project assumes you have knowledge of:

Summary

Here lies a loose collection of Bash and Python scripts to process data collected by the Personal Flash in Real-Time artworks. They were part of the No Gaps in the Line exhibition by Nicola Ellis, hosted at Castlefield Gallery in Manchester, U.K.

This project ties into a larger collection of software projects related to the Personal Flash in Real-Time artworks. And, those artworks are a small piece of the much larger Return to Ritherdon project (devised and completed by Nicola Ellis). For more information on the artworks and where they sit in the larger project, please use the links below:

- rtr-docs (The documentation repository for all the Personal Flash in Real-Time software projects, with an overview of how they tie into the Return to Ritherdon project)

- Return to Ritherdon Org. Page (The 'home page' for the Return to Ritherdon project on this site, containing a list of all the publicly available repositories)

Before continuing, I thought it would be appropriate to briefly mention who/what Ritherdon is. It is a business/factory in Darwen, U.K. and specialises in manufacturing electrical enclosures and other related products. So, if you have spent any time in the U.K. and seen one of those green electrical boxes lurking on a street corner, there is a good chance these folks made it.

NOTE: This project does not contain documentation in the rtr-docs repository. This is a self-contained mini-project which is not directly related to the Personal Flash in Real-Time artworks.

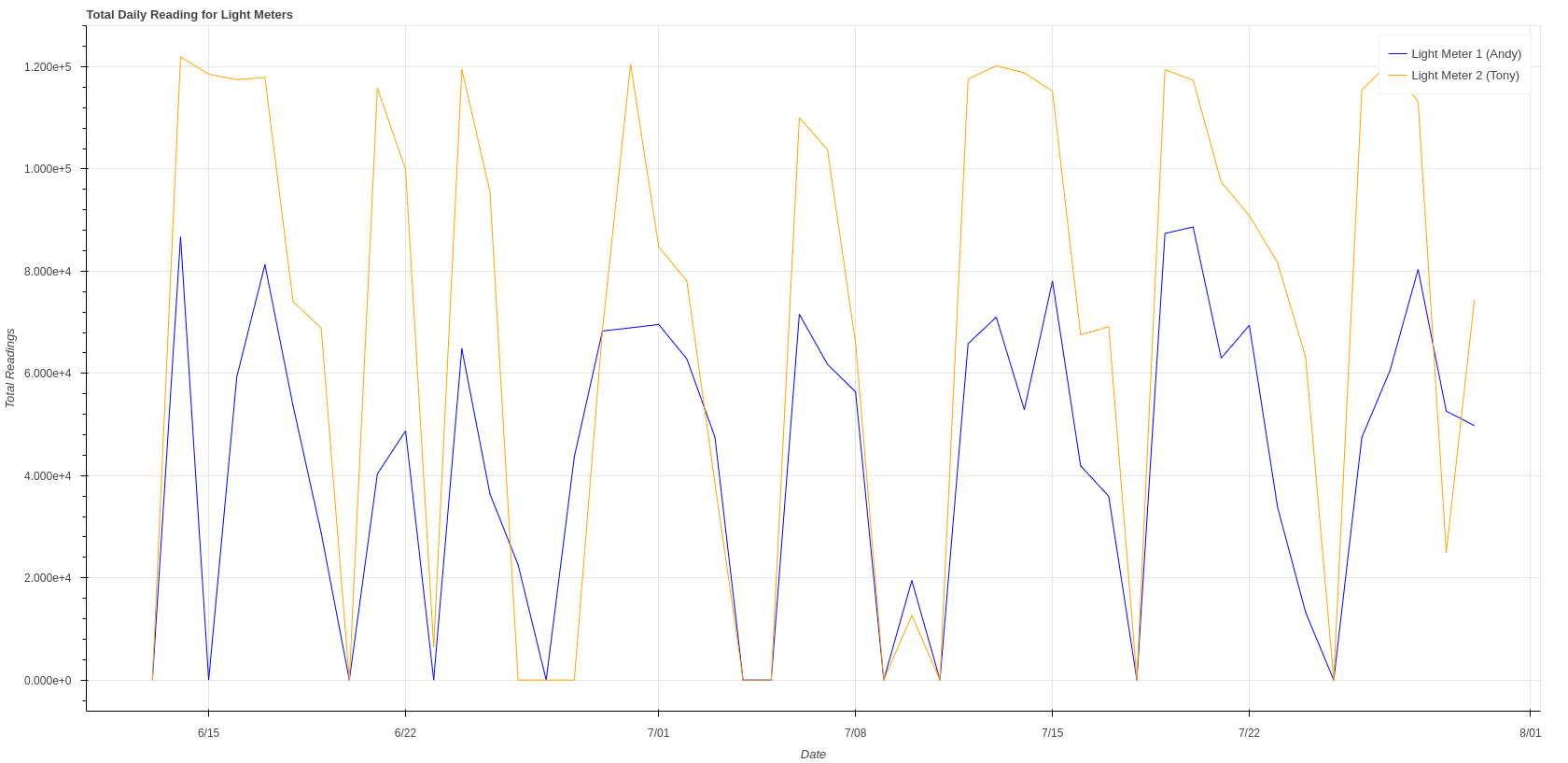

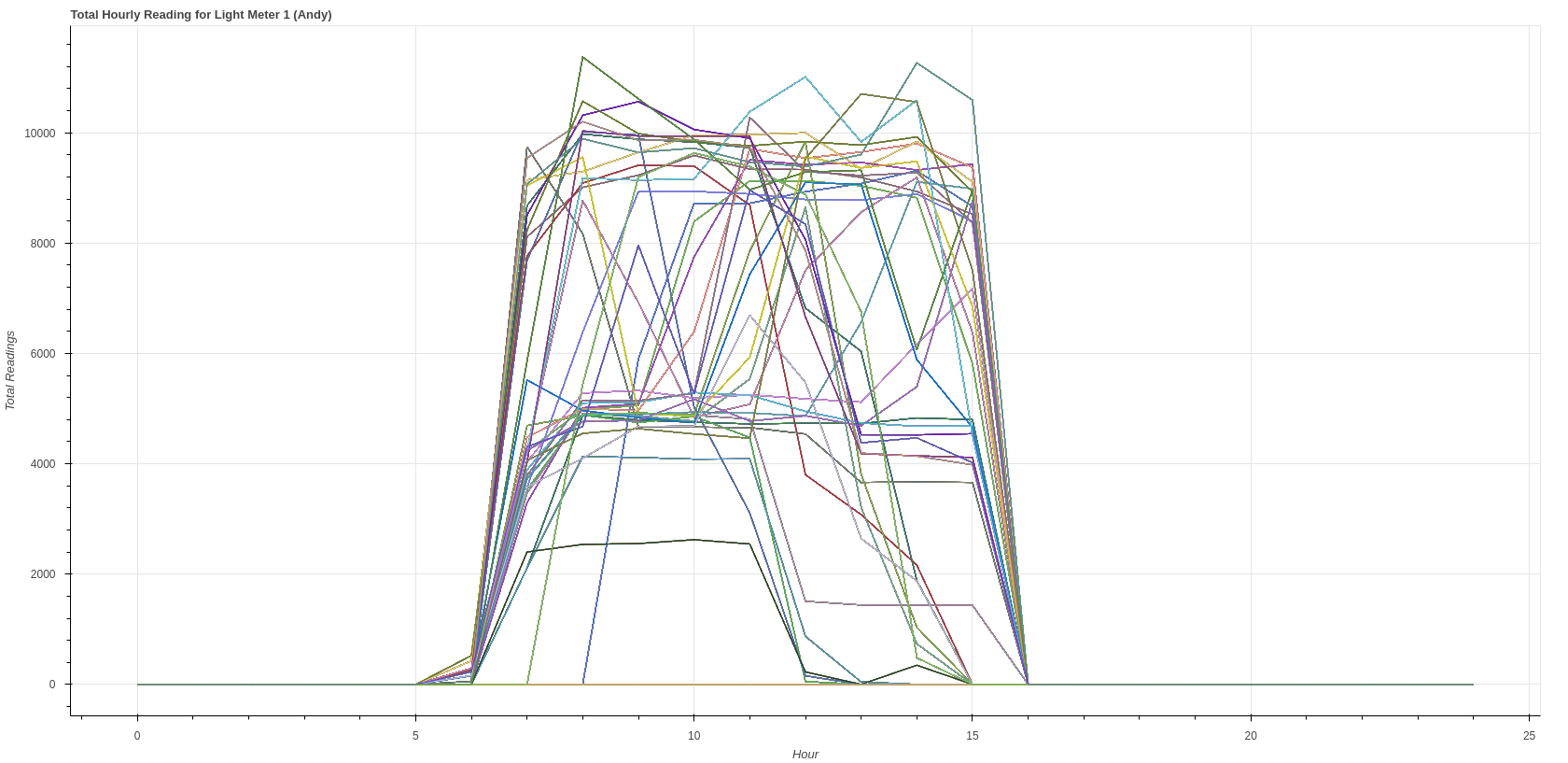

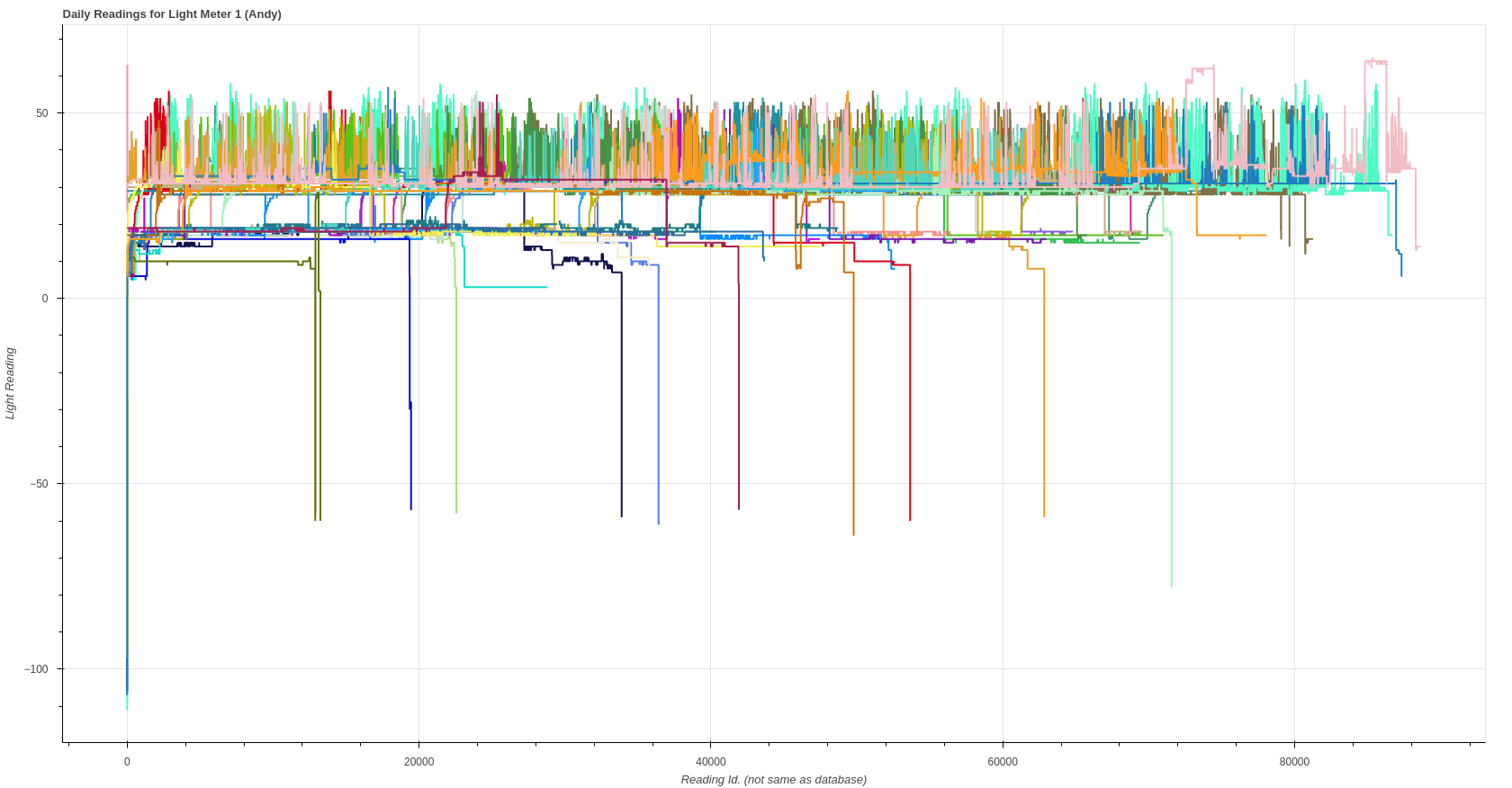

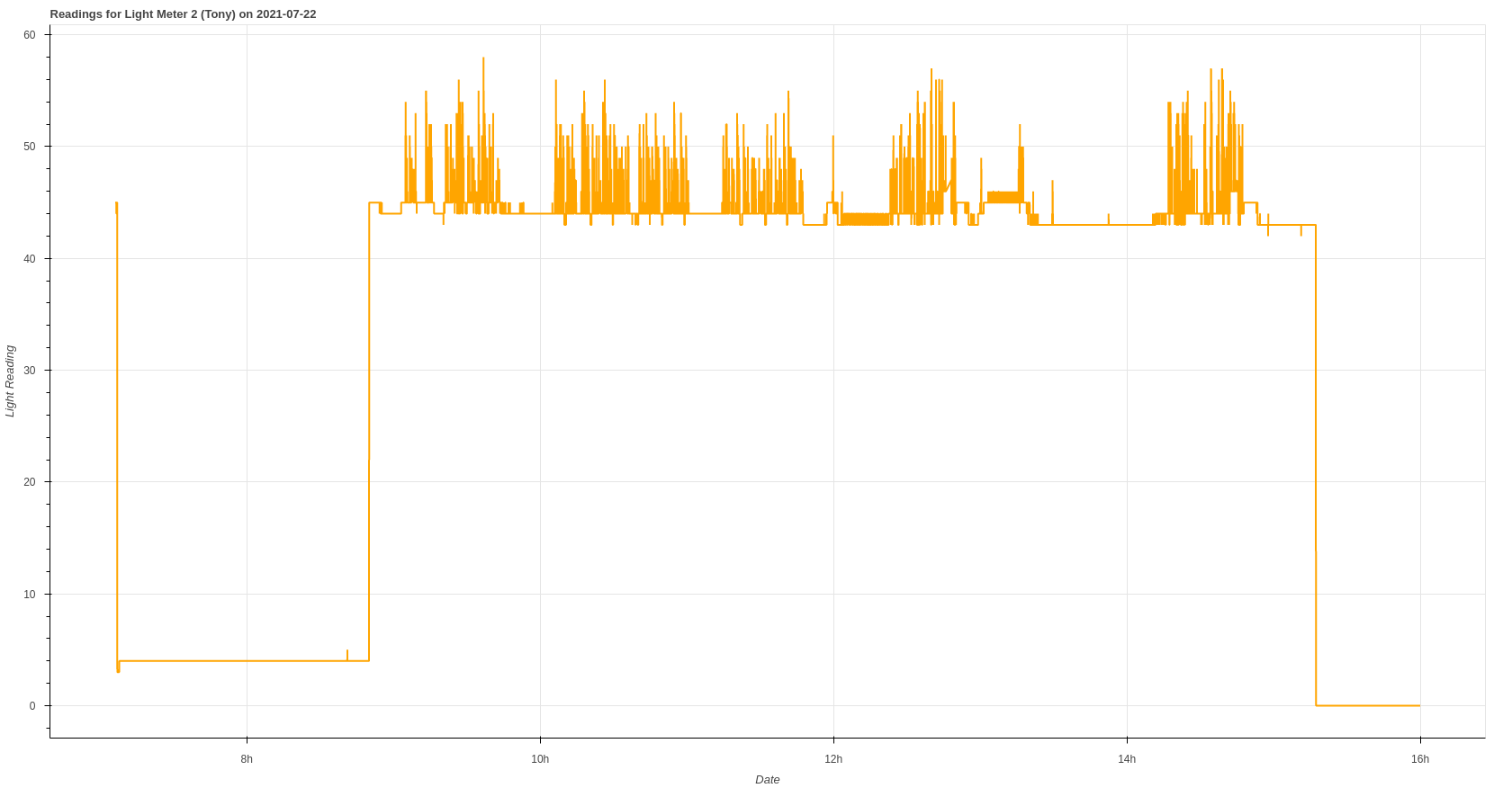

Examples/Screenshots

- docs (For more information on the types of charts produced)

At the time of writing, the scripts in this repository produce over one hundred charts/files. So, here is a selection of the types of charts produced after processing the data in the data/lm1-exhibiton-all.csv and data/lm2-exhibiton-all.csv files.

Overview of the Personal Flash in Real-Time Artworks

Personal Flash in Real-Time consists of two artworks, named Personal Flash in Real-Time (Andy) and Personal Flash in Real-Time (Tony). Each one measured the light in the welding booths in the Ritherdon factory and forwarded those readings on to a server running in Amazon's 'cloud' (see Amazon Web Services (AWS) for more information). While this was happening, two sets of lights, residing in Castlefield Gallery, would turn on and off whenever the system detected someone welding in Ritherdon. This happened because the Relays controlling the lights received the latest Light Meter readings taken in Ritherdon via the (AWS) server.

The (AWS) server stored every reading taken in a SQLite database and this project pokes and prods at the data – to plot charts/graphs.

Design Notes and Trade-Off Decisions

- Essentially, this project is about taking the data from data/lm1-exhibiton-all.csv and data/lm2-exhibiton-all.csv and producing interactive charts for Nicola (the artist) to utilise how she sees fit.

- The separator.sh and totalilator.sh scripts split the .csv files, mentioned above, into smaller files in an attempt to make them easier to work with on average hardware.

  - On top of that, I have only committed the CSV files mentioned in point 1 to the repository as a means to reduce the clutter in the repository's Git commit history.

  - You will need to split the CSV files up yourself after you have cloned the repository, using the scripts mentioned in point 2.

- The database containing the actual data is not included with this repository.

  - The database used for the No Gaps in the Line exhibition is approximately 500MB and I thought it was unreasonable to expect people to download and work with a repository of that size, given a repository of this nature.

  - The data exported from the database covers 2021-06-13 (13th June, 2021) to 2021-08-01 (1st August, 2021) for both Light Meters (the length of the exhibition). There is a little more (test) data in the database, but exporting only the exhibition period seemed the most appropriate decision.

- I chose to work with CSV files out of convenience more than anything else: it is the easiest format to export the data to from the SQLite database.

- I also used Bash, Awk and Ripgrep (rg) out of convenience; they were already on my computer.

- I used Bokeh because I have already used it and it is the only thing I know which can create interactive charts as individual HTML files, which I can share with anyone not comfortable with computers.

- I used Python because of Bokeh.

- Overall, Nicola wants to work with the charts produced by this data so any decisions made should be in service to that end.

- I have taken a hard-coded approach to filenames in the code because the code is not the main objective here, the charts are. In other words, long-term flexibility and maintenance are not a concern here.
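The export step mentioned in the notes above (SQLite database to CSV) could look something like the following in Python, using only the standard library. This is a minimal sketch and not the export actually used for this project: the table name `readings`, the column names `recorded_at` and `reading`, and the `export_readings` helper are all assumptions made for illustration. Only the date range comes from the notes above.

```python
import csv
import sqlite3

# Assumed schema: a table called 'readings' with a text timestamp column
# 'recorded_at' and a 'reading' column. The real database may differ.
QUERY = """
    SELECT recorded_at, reading
    FROM readings
    WHERE date(recorded_at) BETWEEN ? AND ?
    ORDER BY recorded_at
"""


def export_readings(conn, csv_path, start="2021-06-13", end="2021-08-01"):
    """Write every reading between start and end (inclusive) to csv_path.

    Returns the number of rows written.
    """
    rows_written = 0
    with open(csv_path, "w", newline="") as handle:
        writer = csv.writer(handle)
        for row in conn.execute(QUERY, (start, end)):
            writer.writerow(row)
            rows_written += 1
    return rows_written
```

To try it, open a connection with `sqlite3.connect(...)` (pointing at your own copy of a database with a matching layout) and pass it to `export_readings` along with the path of the CSV file you want to create.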

Set-Up and Using the Code

Open your terminal, making sure you are in the directory you want the repository cloned to.

The Bash (.sh) scripts need calling before the Python (.py) ones. You need to process the lm1-exhibiton-all.csv and lm2-exhibiton-all.csv files first because the Python (.py) scripts assume certain files are already in the /data directory.

cd <INSERT YOUR PATH OF CHOICE HERE...>

git clone https://git.abbether.net/return-to-ritherdon/ritherdon-charts.git

cd ritherdon-charts

# You need to split the two files in /data first...

./separator.sh

./totalilator.sh

# You should have new files and directories in /data now...

# Then create a Python virtual-environment (venv) to make the charts...

# I've named the one here 'venv' (the second one) and stored it in the root of

# this project's directory.

python3 -m venv venv

# Activate the virtual-environment...

source venv/bin/activate

# You should see '(venv)' in your (terminal) prompt, for example,

# (venv) yourname@yourpc:~/local-dev/ritherdon-charts$

# Install Python dependencies/packages via pip...

pip install -r requirements.txt

When the packages have finished installing (via pip), you should be ready to go. From there, you can simply call the Python (.py) scripts (from the terminal with the venv activated). For example,

# Make sure you are in the terminal with the virtual-environment activated...

python lm1-hourly-totals.py

# Output from script...

python daily-totals.py

# Output from script...

When you have finished, you will need to deactivate the virtual-environment. You can do that by entering deactivate in your terminal. You should see the (venv) part of your prompt removed.

# Before you deactivate your Python virtual-environment (venv)...

# (venv) yourname@yourpc:~/local-dev/ritherdon-charts$

# Deactivate your Python venv.

deactivate

# After you have deactivated your Python virtual-environment (venv)...

# yourname@yourpc:~/local-dev/ritherdon-charts$

From here, you can either write your own scripts to form new charts or just play with the CSV files in something like Microsoft Excel or LibreOffice Calc.
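Because the Python (.py) scripts assume the Bash scripts have already populated /data, a small pre-flight check can save a confusing error when writing your own scripts. The sketch below is hypothetical and not part of this repository: the `missing_inputs` helper is made up for illustration, and the list of expected paths is taken from the /data layout described in this README. Which of those paths each chart script actually reads is an assumption.

```python
from pathlib import Path

# Paths (relative to the data directory) taken from the layout described in
# this README. Treat the list as an assumption and adjust it to your needs.
EXPECTED = (
    "light-meter-1",
    "light-meter-2",
    "light-meter-1-daily-totals.csv",
    "light-meter-2-daily-totals.csv",
    "light-meter-1-hourly-totals",
    "light-meter-2-hourly-totals",
)


def missing_inputs(data_dir="data"):
    """Return the expected paths that do not exist under data_dir."""
    root = Path(data_dir)
    return [name for name in EXPECTED if not (root / name).exists()]
```

If `missing_inputs()` returns anything other than an empty list, run ./separator.sh and ./totalilator.sh before trying to produce charts.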

Working with the Files/Data Produced After Running the Project's Bash Scripts

/data stores the CSV files and /output stores the charts. Run ./separator.sh to get started.

When you clone the repository, you will find the /data directory has the following layout,

tree -L 1 data

data

├── lm1-exhibiton-all.csv # Approx. 60MB

└── lm2-exhibiton-all.csv # Approx. 96MB

0 directories, 2 files

This /data directory is responsible for storing the raw data (i.e. the CSV files). The charts, created via the Python (.py) scripts, reside in the /output directory.

The /output directory should not exist until you run ./separator.sh.

After you run the Bash scripts (./separator.sh and ./totalilator.sh), you should see something like the following in the /data directory,

tree -L 2 data

data

├── light-meter-1

│ ├── 2021-06-13 # Directory of hourly readings taken on 13/06/2021.

│ ├── 2021-06-13.csv # File containing all the readings taken on 13th June 2021.

│ ├── 2021-06-14 # Directory of hourly readings taken on 14/06/2021.

│ ├── 2021-06-14.csv # File containing all the readings taken on 14th June 2021.

# More files and folders here...

│ ├── 2021-07-30 # Directory of hourly readings taken on 30/07/2021.

│ └── 2021-07-30.csv # File containing all the readings taken on 30th July 2021.

│

├── light-meter-1-daily-totals.csv # Total number of readings recorded for each day.

├── light-meter-1-hourly-totals

│ ├── 2021-06 # Directory containing files with hourly totals (per day) for June.

│ └── 2021-07 # Directory containing files with hourly totals (per day) for July.

│

├── light-meter-2

│ ├── 2021-06-13 # Directory of hourly readings taken on 13/06/2021.

│ ├── 2021-06-13.csv # File containing all the readings taken on 13th June 2021.

│ ├── 2021-06-14 # Directory of hourly readings taken on 14/06/2021.

│ ├── 2021-06-14.csv # File containing all the readings taken on 14th June 2021.

# More files and folders here...

│ ├── 2021-07-30 # Directory of hourly readings taken on 30/07/2021.

│ └── 2021-07-30.csv # File containing all the readings taken on 30th July 2021.

│

├── light-meter-2-daily-totals.csv # Total number of readings recorded for each day.

├── light-meter-2-hourly-totals

│ ├── 2021-06 # Directory containing files with hourly totals (per day) for June.

│ └── 2021-07 # Directory containing files with hourly totals (per day) for July.

│

├── lm1-exhibiton-all.csv # Original file.

└── lm2-exhibiton-all.csv # Original file.

104 directories, 100 files
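The per-day files and daily totals shown in the listing above can be approximated in Python with the sketch below. It is not a port of separator.sh or totalilator.sh (their contents are not reproduced in this README), and it makes two loud assumptions about the CSV files: the timestamp sits in the first column and starts with an ISO date (YYYY-MM-DD), and there is no header row. The `split_by_day` function is a made-up name for illustration.

```python
import csv
from collections import Counter
from contextlib import ExitStack
from pathlib import Path


def split_by_day(source_csv, out_dir, timestamp_column=0):
    """Copy each row of source_csv into out_dir/<YYYY-MM-DD>.csv.

    Assumes the timestamp column starts with an ISO date and that the file
    has no header row. Returns a Counter mapping each day to its row count,
    which is the same idea as the '*-daily-totals.csv' files.
    """
    out_root = Path(out_dir)
    out_root.mkdir(parents=True, exist_ok=True)
    totals = Counter()
    writers = {}
    # ExitStack closes the source file and every per-day file on exit.
    with ExitStack() as stack:
        src = stack.enter_context(open(source_csv, newline=""))
        for row in csv.reader(src):
            if not row:
                continue  # Skip blank lines.
            day = row[timestamp_column][:10]
            if day not in writers:
                handle = stack.enter_context(
                    open(out_root / f"{day}.csv", "w", newline="")
                )
                writers[day] = csv.writer(handle)
            writers[day].writerow(row)
            totals[day] += 1
    return totals
```

Keeping one open file per day avoids re-opening a file for every row, which matters with source files of this size. The exhibition ran for roughly fifty days, so the number of open files stays small.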

With the overview/top layer explained, now is a good time to expand on the directories produced in data/light-meter-1 and data/light-meter-2. As an example, I will focus on the data/light-meter-1/2021-06-13 directory (line 3 in the code block above).

tree data/light-meter-1

data/light-meter-1

├── 2021-06-13

│ ├── 2021-06-13--00.csv

│ ├── 2021-06-13--01.csv

│ ├── 2021-06-13--02.csv

│ ├── 2021-06-13--03.csv

│ ├── 2021-06-13--04.csv

│ ├── 2021-06-13--05.csv

│ ├── 2021-06-13--06.csv

│ ├── 2021-06-13--07.csv

│ ├── 2021-06-13--08.csv

│ ├── 2021-06-13--09.csv # All readings recorded between the hours of 09:00 and 10:00.

│ ├── 2021-06-13--10.csv

│ ├── 2021-06-13--11.csv

│ ├── 2021-06-13--12.csv

│ ├── 2021-06-13--13.csv

│ ├── 2021-06-13--14.csv

│ ├── 2021-06-13--15.csv

│ ├── 2021-06-13--16.csv

│ ├── 2021-06-13--17.csv # All readings recorded between the hours of 17:00 and 18:00.

│ ├── 2021-06-13--18.csv

│ ├── 2021-06-13--19.csv

│ ├── 2021-06-13--20.csv

│ ├── 2021-06-13--21.csv

│ ├── 2021-06-13--22.csv

│ ├── 2021-06-13--23.csv

│ └── 2021-06-13--24.csv

├── 2021-06-13.csv # All readings recorded on 2021-06-13 (13th June 2021).

# This file/directory pattern repeats for all dates up to the end of July...

├── 2021-07-30

│ ├── 2021-07-30--00.csv

│ ├── 2021-07-30--01.csv # All readings recorded between the hours 01:00 and 02:00.

# Usual '2021-07-30--07' type files here...

└── 2021-07-30.csv # All readings recorded on 2021-07-30 (30th July 2021).

48 directories, 1248 files
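If you write your own scripts against the hourly files, the naming pattern above (for example, 2021-06-13--09.csv) is easy to parse. The following is a minimal sketch with made-up helper names (`parse_hourly_name` and `hourly_files`); it relies only on the file names shown in the listing and assumes nothing about the contents of the files.

```python
import re
from datetime import date
from pathlib import Path

# Matches names such as '2021-06-13--09.csv'.
HOURLY_NAME = re.compile(r"^(\d{4})-(\d{2})-(\d{2})--(\d{2})\.csv$")


def parse_hourly_name(filename):
    """Return (date, hour) for an hourly file name, or None if it does not match."""
    match = HOURLY_NAME.match(Path(filename).name)
    if match is None:
        return None
    year, month, day, hour = (int(part) for part in match.groups())
    # The hour stays a plain integer rather than a datetime.time because the
    # listing above includes a '--24' file, which datetime.time would reject.
    return date(year, month, day), hour


def hourly_files(day_dir):
    """Return {hour: path} for every hourly CSV file found in day_dir."""
    found = {}
    for path in sorted(Path(day_dir).glob("*.csv")):
        parsed = parse_hourly_name(path.name)
        if parsed is not None:
            found[parsed[1]] = path
    return found
```

For example, `hourly_files("data/light-meter-1/2021-06-13")` would map each hour to the matching file in that directory, ready to be read and plotted.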

If you have run ./separator.sh, the /output directory should now exist in the project's root directory, with nothing in it. This is where the charts (or any other artefacts) will go after running the Python (.py) scripts. The project's root directory should now look like the following,

tree -L 1

.

├── assets

├── dailies-overlayed.py

├── dailies-side-by-side.py

├── daily-breakdowns.py

├── daily-totals.py

├── data # This should have a load of new files and directories...

├── day-to-day-comparisons.py

├── LICENSE

├── lm1-hourly-totals.py

├── lm2-hourly-totals.py

├── output # *NEW* This should now exist and charts go in here...

├── README.org

├── requirements.txt

├── separator.sh

├── sql-statements.sql

├── totalilator.sh

└── venv # See 'Set-Up and Using the Code' section if you do not see this...

4 directories, 13 files

From here, you can either create some charts with the Python (.py) scripts and take a look at them in /output or open the CSV files in /data and inspect the data.